Suppose a project contains two plugins, A and B, each containing one oscillator. A's output feeds B, and B is connected to the output. The two oscillators have exactly the same frequency and appear to be in perfect sync. But if we stop FFRend in the debugger and examine the two oscillators, we'll find that they don't have the same value! A's oscillator is ahead by a small amount. This is because A is working ahead of B, i.e. A is operating on a frame that hasn't arrived at B yet. This is unavoidable; it's how pipelines (or assembly lines) increase throughput. We need to be careful when we say that A and B appear to be in sync. What we really mean is that A and B are in sync in the output! This is the synchronization we care about. A and B should be out of sync when viewed from an external point of view (e.g. the debugger) because that's our proof that parallel processing is occurring.
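A toy model makes the distinction concrete. The sketch below is not FFRend code: `Oscillator`, `Plugin`, `run`, and the `backlog` parameter are hypothetical names, the oscillator is assumed to be a simple ramp that advances once per processed frame, and the pipeline is reduced to a single queue between A and B. Each plugin stamps the frame it processes with the oscillator value it used.

```python
from collections import deque

class Oscillator:
    """Ramp oscillator: phase advances by freq once per processed frame."""
    def __init__(self, freq):
        self.freq = freq
        self.phase = 0.0

    def step(self):
        value = self.phase
        self.phase = (self.phase + self.freq) % 1.0
        return value

class Plugin:
    def __init__(self, name, freq):
        self.name = name
        self.osc = Oscillator(freq)

    def process(self, frame):
        # Record the oscillator value this plugin used for this frame.
        frame[self.name] = self.osc.step()
        return frame

def run(ticks, backlog):
    """A works up to `backlog` frames ahead of B; B feeds the output."""
    a, b = Plugin("A", 0.125), Plugin("B", 0.125)
    queue, output = deque(), []
    for n in range(ticks):
        queue.append(a.process({"frame": n}))
        if len(queue) > backlog:
            output.append(b.process(queue.popleft()))
    return a, b, output

a, b, output = run(ticks=10, backlog=3)
print(a.osc.phase, b.osc.phase)               # states as seen "in the debugger"
print(all(f["A"] == f["B"] for f in output))  # states as seen in the output
```

In this model the two oscillator states differ by three frames' worth of phase when inspected from outside, yet every frame that reaches the output carries identical values from A and B.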

However, this necessary time shift means that complications can and do occur. For example, suppose we save the project. As currently coded, we're just copying the current values of the oscillators to the project file. But the current values aren't all from the same time! In other words, the current value of A's parameter represents a state several frames ahead of B's state, which is in turn several frames ahead of the output's state. Consequently, if we reopen the project, the oscillators won't be in sync in the output, despite the fact that they were in sync when the project was saved. The error occurs because we're violating an implicit assumption that all the values in the project file are from the same frame of reference.

To solve this problem, the save function needs to compensate the oscillator values for time shift. This should be doable because a) oscillators are periodic functions and can therefore be stepped in either direction with predictable results, and b) each plugin knows exactly how many frames ahead it is relative to the output state.
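A minimal sketch of that compensation, assuming each oscillator's state can be reduced to a normalized phase (0 to 1) that advances by its frequency once per frame. The names `rewind_phase`, `snapshot_for_save`, and `frames_ahead` are illustrative, not taken from FFRend, and a waveform whose value is not a pure function of phase (a random one, for instance) would need different handling.

```python
def rewind_phase(phase, freq, frames_ahead):
    """Step a normalized phase (0..1) backward by frames_ahead frames."""
    return (phase - freq * frames_ahead) % 1.0

def snapshot_for_save(plugins):
    """Return each plugin's oscillator phase as of the current output frame."""
    return {
        p["name"]: rewind_phase(p["phase"], p["freq"], p["frames_ahead"])
        for p in plugins
    }

# Hypothetical state: A is five frames ahead of the output, B is two.
plugins = [
    {"name": "A", "phase": 0.75,  "freq": 0.125, "frames_ahead": 5},
    {"name": "B", "phase": 0.375, "freq": 0.125, "frames_ahead": 2},
]
saved = snapshot_for_save(plugins)
print(saved)
```

Although the live phases differ, both rewound values land on the same phase, which is the state the output frame actually shows and therefore the one worth writing to the project file.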

Note that this problem is unaffected by pause, i.e. the problem occurs regardless of whether the engine was paused when the project was saved. This is because pausing the engine doesn't reset the frame pipeline (unlike stopping the engine, which does); all the frames are frozen in mid-flight, so however far ahead a given plugin was when the engine was paused, it remains that way.

An additional, related problem was observed: inserting a plugin while the engine is running also causes oscillators to slip. Here the issue is even more subtle. The engine can only modify the plugin array while stopped, so inserting a plugin necessarily involves stopping and restarting the engine. However, stopping the engine resets the pipeline, discarding any frames that were in progress but not yet output. Thus when the engine is restarted, all the plugins initially have the same frame of reference. But the oscillators still have the same states they had at the moment the engine stopped, when the plugins all had different frames of reference! Consequently there's now a mismatch between a plugin's oscillator states and the frame it's processing. The further the plugin is from the output, the larger this mismatch is, and the worse the slippage is.

This problem can be solved similarly to the one described above, by compensating each plugin's oscillators as needed in the case where the engine is restarting.
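The restart case can be modelled the same way. In this sketch (hypothetical names again: `restart`, `slip`, and the per-plugin `lead` field), stopping the engine empties the pipeline, so after a restart every plugin processes the same frame next. Whatever phase difference the oscillators carry across the restart then shows up directly in the output.

```python
def restart(plugins, compensate):
    """Model an engine restart: the pipeline is empty, so every lead becomes 0."""
    for p in plugins:
        if compensate:
            # Rewind the oscillator to the state it had for the output frame.
            p["phase"] = (p["phase"] - p["freq"] * p["lead"]) % 1.0
        p["lead"] = 0

def slip(plugins):
    """Phase offset between first and last plugin on the next frame they share."""
    return (plugins[0]["phase"] - plugins[-1]["phase"]) % 1.0

def chain():
    # State when the engine stops: A leads the output by 5 frames, B by 2.
    return [
        {"name": "A", "phase": 0.75,  "freq": 0.125, "lead": 5},
        {"name": "B", "phase": 0.375, "freq": 0.125, "lead": 2},
    ]

uncompensated, compensated = chain(), chain()
restart(uncompensated, compensate=False)
restart(compensated, compensate=True)
print(slip(uncompensated), slip(compensated))
```

Without compensation the offset equals the difference in leads times the frequency (three frames here), which matches the observation that plugins further from the output slip more. With compensation the offset is zero.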

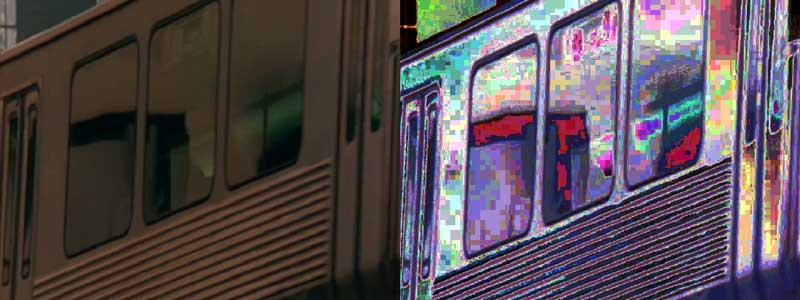

As previously mentioned, these problems took a long time to show up, and even when they did it wasn't simple to verify them, because of time uncertainty. The most reliable tool for observing them was an invention I should have thought of long ago: a Freeframe plugin that simply writes its parameter to the input frame, as text. If you modulate the parameter with an oscillator, you're now stamping each output frame with an unambiguous record of how that oscillator's state changed over time. Most importantly, you're seeing the value the oscillator had when its parent plugin processed that frame, regardless of any time shifts due to pipeline backlog within the engine.

The plugin needs extra parameters for controlling the position of the text within the frame, so that different instances don't overwrite each other's text, but other than that it's totally straightforward. I'm thinking of making a similar plugin that draws the parameter as a waveform, like an oscilloscope.
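The idea can be illustrated with a stand-in for the real plugin. This is only a model: an actual Freeframe plugin would render text into a pixel buffer, whereas here the frame is a list of equal-width strings, and `stamp`, `col`, and `row` are made-up names for the value and position parameters.

```python
def stamp(frame, value, col, row):
    """Write value as text into a frame modelled as a list of strings."""
    text = f"{value:.4f}"
    line = frame[row]
    # Overwrite characters in place and clip to the frame width.
    frame[row] = (line[:col] + text + line[col + len(text):])[:len(line)]
    return frame

frame = ["." * 12 for _ in range(3)]
stamp(frame, 0.25, col=0, row=0)   # first instance, e.g. in plugin A
stamp(frame, 0.5, col=0, row=1)    # second instance on its own row
print("\n".join(frame))
```

Giving each instance its own row keeps the stamps from overwriting each other, so a single output frame shows what every stamped oscillator was doing when that frame passed through.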

Tests also show that even within a single plugin, oscillators may slip (become out of phase with each other), though the slippage is of a much lower order of magnitude. Whether they slip or not depends on the numerical relationship between their respective frequencies. For example:

| Freq A | Freq B | Slippage |
|--------|--------|----------|
| .1 | .05 | none |
| .1 | .0125 | none |
| .1 | .0333333 | .0002 after 2 million frames |

This type of slippage is due to the use of floating-point in the oscillator, so there's probably not much to be done about it, but more research is needed.
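One pattern in the table is consistent with how binary floating point behaves: .05 and .0125 are .1 divided by powers of two (2 and 8), and scaling by a power of two is exact, whereas a ratio of 3 is not. The sketch below checks that property and measures rounding drift for an oscillator that adds its frequency once per frame in single precision. That accumulation scheme is an assumption; FFRend's actual oscillator code is not shown here, and `to_f32` and `accumulated_drift` are hypothetical helpers.

```python
import struct
from fractions import Fraction

def to_f32(x):
    """Round a Python float to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def accumulated_drift(freq, frames):
    """Phase error (in cycles) from adding freq once per frame in float32."""
    step = to_f32(freq)
    phase = 0.0
    for _ in range(frames):
        phase = to_f32(phase + step)
        if phase >= 1.0:
            phase = to_f32(phase - 1.0)
    # Exact phase for the same float32 step, computed with rationals.
    exact = (Fraction(step) * frames) % 1
    error = abs(Fraction(phase) - exact)
    return float(min(error, 1 - error))

# Power-of-two ratios survive rounding exactly; a ratio of 3 does not.
print(0.05 * 2 == 0.1, 0.0125 * 8 == 0.1, 0.0333333 * 3 == 0.1)

for freq in (0.1, 0.05, 0.0125, 0.0333333):
    print(freq, accumulated_drift(freq, 100_000))
```

Comparing the drift figures for each pair gives a rough sense of how much relative slippage rounding alone could produce under this assumption. It may also be worth noting that .0333333 times 3 is .0999999 rather than .1, so some of a measured offset could come from the entered value itself and not only from rounding.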